Anonymous website

Control4D: Dynamic Portrait Editing by Learning 4D GAN from 2D Diffusion-based Editor

Anonymous Submission

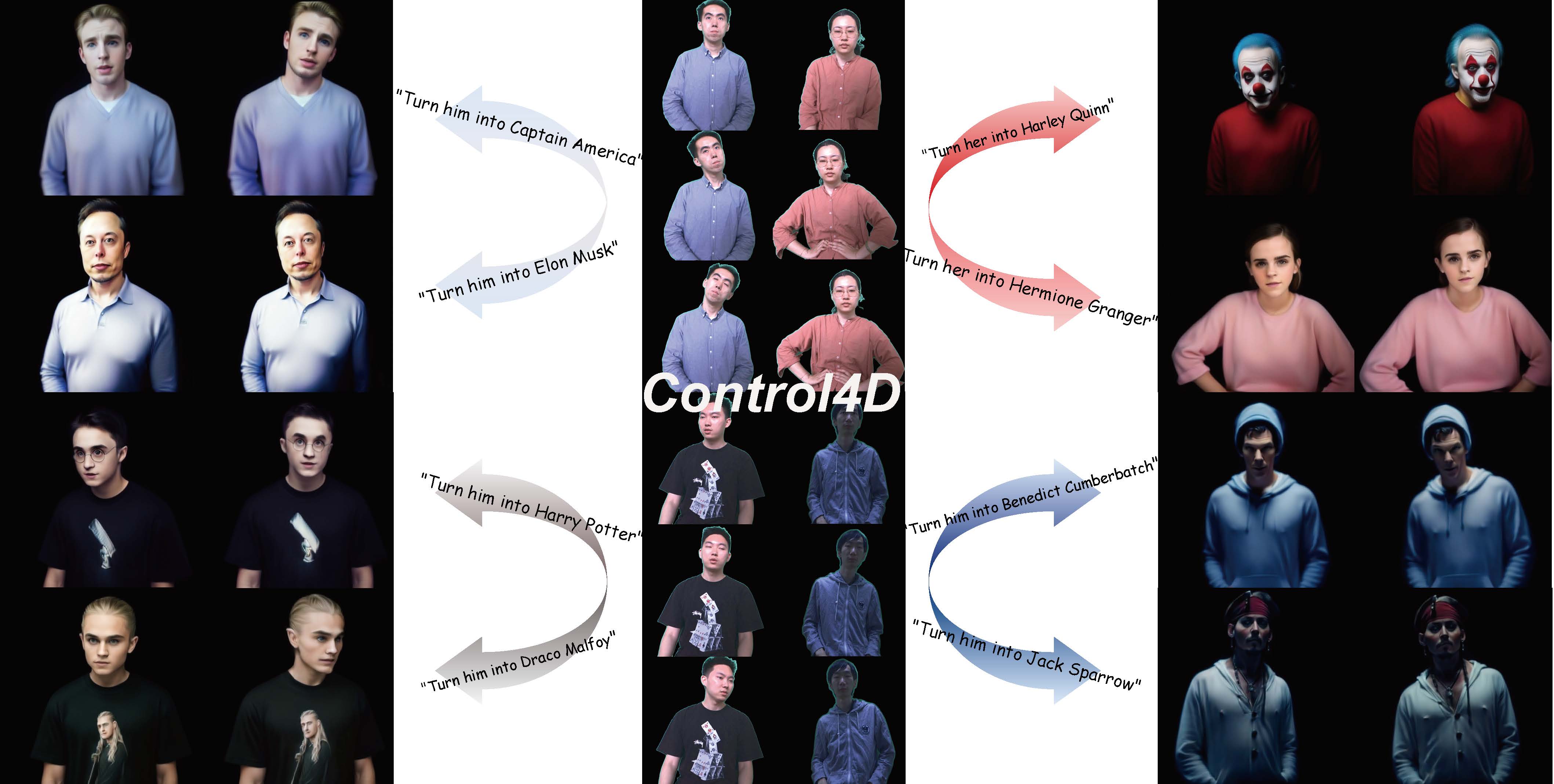

Fig. 1. We propose Control4D, an approach to high-fidelity, spatiotemporally consistent 4D portrait editing driven only by text instructions. Given the video frames in the middle and the text instructions (next to the arrows), Control4D generates the realistic, 4D-consistent editing results shown on both sides.

Abstract

Recent years have seen considerable progress in editing images with text instructions. When these editors are applied to dynamic scenes, however, the edited scene tends to be temporally inconsistent because the 2D editors process each frame independently. To tackle this issue, we propose Control4D, a novel approach for high-fidelity and temporally consistent 4D portrait editing. Control4D is built upon an efficient 4D representation and a 2D diffusion-based editor. Instead of using direct supervision from the editor, our method learns a 4D GAN from it, thereby avoiding inconsistent supervision signals. Specifically, we employ a discriminator to learn the generation distribution from the edited images and then update the generator with the discrimination signals. For more stable training, multi-level information is extracted from the edited images and used to facilitate the learning of the generator. Experimental results show that Control4D surpasses previous approaches and achieves more photorealistic and consistent 4D editing results.
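The idea of learning a GAN from per-frame edits, rather than regressing to them, can be illustrated with a deliberately tiny sketch. Everything here is an assumption for illustration, not Control4D's method: each "image" is a single scalar; the 2D editor's outputs are a fixed list whose values disagree slightly, mimicking frame-by-frame inconsistency; the generator is one shared parameter `mu` that renders the same value for every frame (a stand-in for a temporally consistent 4D representation); and the discriminator is a hypothetical linear logistic critic `D(x) = sigmoid(w * x)` trained with the standard GAN loss, while the generator uses the non-saturating loss. The names `train_toy_gan`, `mu`, and `w` are hypothetical, and the multi-level information mentioned in the abstract is omitted.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def train_toy_gan(edited_frames, steps=2000, lr=0.2):
    """Toy adversarial loop (hypothetical): learn one shared value from per-frame edits.

    edited_frames: scalars standing in for the 2D editor's per-frame outputs.
    Returns (mu, w): the generator's shared output and the critic's weight.
    """
    mu = 0.0  # generator: the same rendered value for every frame
    w = 0.0   # hypothetical linear critic: D(x) = sigmoid(w * x)
    n = len(edited_frames)
    for _ in range(steps):
        # Discriminator step: edited frames are "real", the rendering is "fake".
        # L_D = mean_i[-log D(r_i)] - log(1 - D(mu))
        grad_w = sum(-(1.0 - sigmoid(w * r)) * r for r in edited_frames) / n
        grad_w += sigmoid(w * mu) * mu
        w -= lr * grad_w
        # Generator step: only the critic's signal is used, never a per-frame target.
        # Non-saturating loss L_G = -log D(mu)
        grad_mu = -(1.0 - sigmoid(w * mu)) * w
        mu -= lr * grad_mu
    return mu, w


if __name__ == "__main__":
    frames = [1.8, 2.3, 1.9, 2.1, 1.9]  # slightly inconsistent "edits", mean 2.0
    mu, w = train_toy_gan(frames)
    print(round(mu, 3))  # → 2.0
```

Because the generator never copies any single frame, it settles at the equilibrium where the critic can no longer separate real from fake (here `w = 0` and `mu` equal to the mean of the edited frames) instead of reproducing the per-frame disagreement. A linear critic can only compare means, so this toy matches the first moment only; a real image discriminator would compare far richer statistics.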

Static Scenes

Dynamic Scenes